Custom object detection using YOLOv8

Category: Computer Vision | Posted on 2024-05-14 13:10:52

Introduction

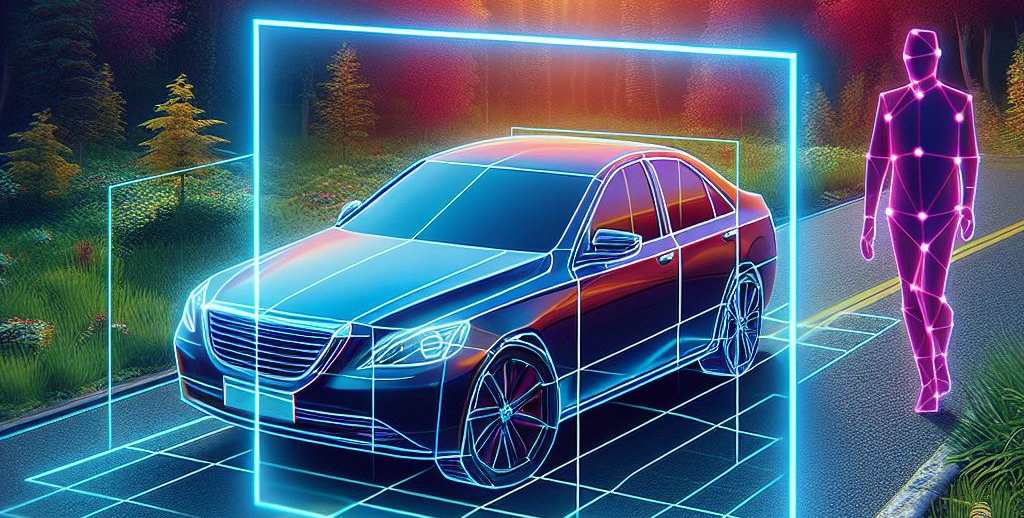

Welcome to our comprehensive guide to training YOLOv8 for custom object detection. Whether you are an experienced data scientist or new to computer vision, this guide gives you the essentials for applying YOLOv8 to your own detection problem. Object detection is a core component of applications in surveillance, security, autonomous vehicles, and industrial automation. YOLOv8, short for You Only Look Once version 8, is a state-of-the-art deep learning model for detecting objects in images and videos, known for combining high speed with strong accuracy. In this post we cover the fundamentals of YOLOv8 and then walk through the practical steps of training a custom model on your own data.

Understanding YOLOv8:

YOLO stands for "You Only Look Once," and YOLOv8, developed by Ultralytics, is an advanced member of this family of object detection models. Unlike multi-stage detectors, which first propose candidate regions and then classify each one in a separate step, YOLOv8 processes the entire image in a single forward pass of the network, which makes it very efficient.

Key Features of YOLOv8:

1. Single-Shot Detection: YOLOv8 detects objects with a single pass through the neural network, which makes it suitable for applications that need fast, accurate, real-time detection, such as autonomous driving and surveillance systems.

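2. Anchor-Free Detection: Unlike earlier YOLO versions such as YOLOv5, which refine a set of predefined anchor boxes, YOLOv8 is anchor-free. Its detection head predicts each bounding box directly from grid locations, without a hand-tuned set of anchor sizes and aspect ratios. This reduces the number of box predictions and simplifies post-processing with non-maximum suppression.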

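3. Multi-Scale Feature Fusion (FPN/PAN Neck): YOLOv8's neck combines a Feature Pyramid Network (FPN) with a Path Aggregation Network (PAN) to fuse features from several levels of the backbone. Predictions are made on three feature maps with strides of 8, 16, and 32 pixels, which lets the model detect both small and large objects.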

4. CSPDarknet-Style Backbone: YOLOv8's backbone is a CSPDarknet-style convolutional network built from C2f modules, a refinement of the C3 blocks used in YOLOv5 (Darknet-53 is the backbone of the older YOLOv3). The backbone acts as the feature extractor, learning rich representations of the image that the neck and detection head build on.
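To make the anchor-free and multi-scale ideas concrete, here is a simplified sketch of how an anchor-free head turns per-location predictions into boxes. It assumes a 640 x 640 input and the three output strides (8, 16, 32) of the default YOLOv8 detection models. `make_anchor_points` and `decode_box` are hypothetical helpers written for illustration, not Ultralytics functions, and the distribution-based decoding that the real head applies to its distance predictions is omitted.

```python
# Simplified sketch of anchor-free box decoding (assumption: distances are
# already plain numbers in grid units; the real head first decodes them
# from a discrete distribution, which is skipped here).

def make_anchor_points(img_size=640, strides=(8, 16, 32)):
    """Return (x, y, stride) for the centre of every grid cell at every pyramid level."""
    points = []
    for s in strides:
        cells = img_size // s  # 80, 40 and 20 cells per side for a 640 input
        for row in range(cells):
            for col in range(cells):
                # Cell centres sit half a cell in from the cell's top-left corner.
                points.append((col + 0.5, row + 0.5, s))
    return points


def decode_box(point, ltrb):
    """Turn predicted distances (left, top, right, bottom) into pixel corners."""
    ax, ay, s = point
    l, t, r, b = ltrb
    # Distances are measured from the cell centre, then scaled by the stride.
    return ((ax - l) * s, (ay - t) * s, (ax + r) * s, (ay + b) * s)


points = make_anchor_points()
print(len(points))  # 80*80 + 40*40 + 20*20 = 8400 candidate locations
print(decode_box(points[0], (0.5, 0.5, 1.5, 1.5)))  # (0.0, 0.0, 16.0, 16.0)
```

The 8,400 locations are why a 640-pixel YOLOv8 detection model emits 8,400 candidate boxes per image before non-maximum suppression filters them.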

Custom Training Data Preparation:

To ensure the effectiveness of YOLOv8 for custom object detection tasks, it's crucial to prepare high-quality training data. This involves collecting, annotating, and preprocessing datasets tailored to your specific application.
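One common preprocessing step is splitting the annotated images into training, validation, and test subsets. The sketch below does this reproducibly with Python's standard library; the 70/20/10 proportions, the seed, and the `split_dataset` name are illustrative assumptions, not YOLOv8 requirements.

```python
import random


def split_dataset(image_names, val_frac=0.2, test_frac=0.1, seed=0):
    """Shuffle file names reproducibly and split them into train/val/test lists."""
    names = sorted(image_names)         # sort first so the result ignores input order
    random.Random(seed).shuffle(names)  # fixed seed -> same split on every run
    n_val = int(len(names) * val_frac)
    n_test = int(len(names) * test_frac)
    val = names[:n_val]
    test = names[n_val:n_val + n_test]
    train = names[n_val + n_test:]      # everything left over goes to training
    return train, val, test


files = [f"{i:06d}.jpg" for i in range(1, 11)]
train, val, test = split_dataset(files)
print(len(train), len(val), len(test))  # 7 2 1
```

Keeping the seed fixed means the validation set stays the same across experiments, so metrics from different training runs remain comparable.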

YOLOv8 Dataset Structure:

Before delving into data preparation, it's essential to understand the typical structure of datasets used with YOLOv8. The dataset should consist of:

A YAML file:

This YAML file (typically named data.yaml) contains:

• Paths to the training, validation, and (optional) test image folders

• The number of classes

• Each class index with its corresponding name

• Optionally, the dataset download link and ownership information
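As an illustration, the Python sketch below builds the text of a minimal data.yaml. It follows the key names used in Ultralytics' own dataset files (`path`, `train`, `val`, `test`, `nc`, `names`); the dataset root `datasets/my_dataset`, the class names `helmet` and `vest`, and the `make_data_yaml` helper are hypothetical placeholders.

```python
def make_data_yaml(root, class_names, with_test=True):
    """Build the text of a minimal data.yaml for a detection dataset."""
    lines = [
        f"path: {root}  # dataset root directory",
        "train: images/train  # relative to 'path'",
        "val: images/val",
    ]
    if with_test:
        lines.append("test: images/test  # optional")
    lines.append(f"nc: {len(class_names)}  # number of classes")
    lines.append("names:")
    for idx, name in enumerate(class_names):
        lines.append(f"  {idx}: {name}")  # class index -> class name
    return "\n".join(lines) + "\n"


text = make_data_yaml("datasets/my_dataset", ["helmet", "vest"])
print(text)
```

Saving the returned string as data.yaml at the dataset root produces the file that training is pointed at; the class indices in `names` must match the class numbers used in the label files.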

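Annotation (labels) folder:

It contains one annotation file per image. Like the images folder, it is split into train, val, and (optional) test subfolders, and each label file has the same name as its image but a .txt extension.

Each annotation file is plain text, with one line per object. For example: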

0 0.481715 0.634028 0.690625 0.713278

0 0.741094 0.524306 0.314750 0.933389

27 0.364844 0.795833 0.078125 0.400000


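The five values on each line are:

• class index (starting from 0)

• x coordinate of the box center, divided by the image width

• y coordinate of the box center, divided by the image height

• full box width, divided by the image width

• full box height, divided by the image height

All four coordinates are therefore normalized to the range 0 to 1.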
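A minimal sketch of reading one label file with only the standard library, assuming the five-value format described above; `YoloBox` and `parse_label_file` are hypothetical names chosen for this example. It also rejects lines whose coordinates fall outside 0 to 1, a quick way to catch labels that were saved in pixels by mistake.

```python
from dataclasses import dataclass


@dataclass
class YoloBox:
    class_id: int
    x_center: float  # normalized by image width
    y_center: float  # normalized by image height
    width: float     # full box width / image width
    height: float    # full box height / image height


def parse_label_file(text):
    """Parse the contents of one YOLO .txt label file into YoloBox records."""
    boxes = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines
        parts = line.split()
        if len(parts) != 5:
            raise ValueError(f"line {line_no}: expected 5 values, got {len(parts)}")
        cls, *coords = parts
        values = [float(v) for v in coords]
        if not all(0.0 <= v <= 1.0 for v in values):
            raise ValueError(f"line {line_no}: coordinates must be normalized to [0, 1]")
        boxes.append(YoloBox(int(cls), *values))
    return boxes


sample = """0 0.481715 0.634028 0.690625 0.713278
0 0.741094 0.524306 0.314750 0.933389
27 0.364844 0.795833 0.078125 0.400000"""
for box in parse_label_file(sample):
    print(box)
```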

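Note that width and height are the full size of the box, not the distance from the center. Mathematically, the box extends half its width on each side of the center point, so its corners in pixels are:

x_min = (x_center - width / 2) × image width and x_max = (x_center + width / 2) × image width

y_min = (y_center - height / 2) × image height and y_max = (y_center + height / 2) × image height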
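The same relationship in code: a small sketch, with hypothetical function names, that converts a label's normalized center format to pixel corner coordinates and back. The reverse conversion is what you need when an annotation tool exports boxes as pixel corners.

```python
def yolo_to_corners(x_c, y_c, w, h, img_w, img_h):
    """Normalized (x_center, y_center, width, height) -> pixel (x_min, y_min, x_max, y_max)."""
    x_min = (x_c - w / 2) * img_w
    y_min = (y_c - h / 2) * img_h
    x_max = (x_c + w / 2) * img_w
    y_max = (y_c + h / 2) * img_h
    return x_min, y_min, x_max, y_max


def corners_to_yolo(x_min, y_min, x_max, y_max, img_w, img_h):
    """Pixel corners -> normalized (x_center, y_center, width, height) for a label file."""
    return (
        (x_min + x_max) / 2 / img_w,
        (y_min + y_max) / 2 / img_h,
        (x_max - x_min) / img_w,
        (y_max - y_min) / img_h,
    )


# A 320 x 240 px box centred in a 640 x 480 image.
label = corners_to_yolo(160, 120, 480, 360, 640, 480)
print(label)                              # (0.5, 0.5, 0.5, 0.5)
print(yolo_to_corners(*label, 640, 480))  # (160.0, 120.0, 480.0, 360.0)
```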

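An images folder:

It contains the image files themselves, split into train, val, and (optional) test subfolders. The complete layout looks like this: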

├── images/
│   ├── train/
│   │   ├── 000001.jpg
│   │   ├── 000008.jpg
│   │   └── 0000032.jpg
│   ├── val/
│   │   ├── 000003.jpg
│   │   ├── 000007.jpg
│   │   └── 0000010.jpg
│   └── test/ (optional)
│       ├── 000006.jpg
│       ├── 000009.jpg
│       └── 0000017.jpg
├── labels/
│   ├── train/
│   │   ├── 000001.txt
│   │   ├── 000008.txt
│   │   └── 0000032.txt
│   ├── val/
│   │   ├── 000003.txt
│   │   ├── 000007.txt
│   │   └── 0000010.txt
│   └── test/ (optional)
│       ├── 000006.txt
│       ├── 000009.txt
│       └── 0000017.txt
└── data.yaml
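Because every label must sit at the mirrored path of its image, a quick consistency check before training can save a failed run. The sketch below maps an image path to its expected label path by replacing the last `images` directory with `labels` and the extension with `.txt`, which, as far as we know, mirrors how Ultralytics locates labels. `image_to_label_path` and `find_unlabeled` are hypothetical helpers, and the path must contain `images` as a directory below some parent (for example `datasets/my_dataset/images/train/...`).

```python
import os


def image_to_label_path(image_path):
    """Map .../images/<split>/<name>.<ext> to .../labels/<split>/<name>.txt."""
    img_dir = f"{os.sep}images{os.sep}"
    lbl_dir = f"{os.sep}labels{os.sep}"
    # Split on the LAST '/images/' component only, so parent folders are untouched.
    head, sep, tail = image_path.rpartition(img_dir)
    if not sep:
        raise ValueError(f"no '{img_dir}' directory in {image_path!r}")
    stem, _ext = os.path.splitext(tail)  # drop .jpg/.png, keep '<split>/<name>'
    return f"{head}{lbl_dir}{stem}.txt"


def find_unlabeled(image_paths):
    """Return the images whose expected label file does not exist on disk."""
    return [p for p in image_paths if not os.path.isfile(image_to_label_path(p))]


example = os.path.join("datasets", "my_dataset", "images", "train", "000001.jpg")
print(image_to_label_path(example))
```

Running `find_unlabeled` over every image in the train and val folders lists the images that are missing an annotation file.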

Practical Example: Custom Detection with YOLOv8